I. Introduction

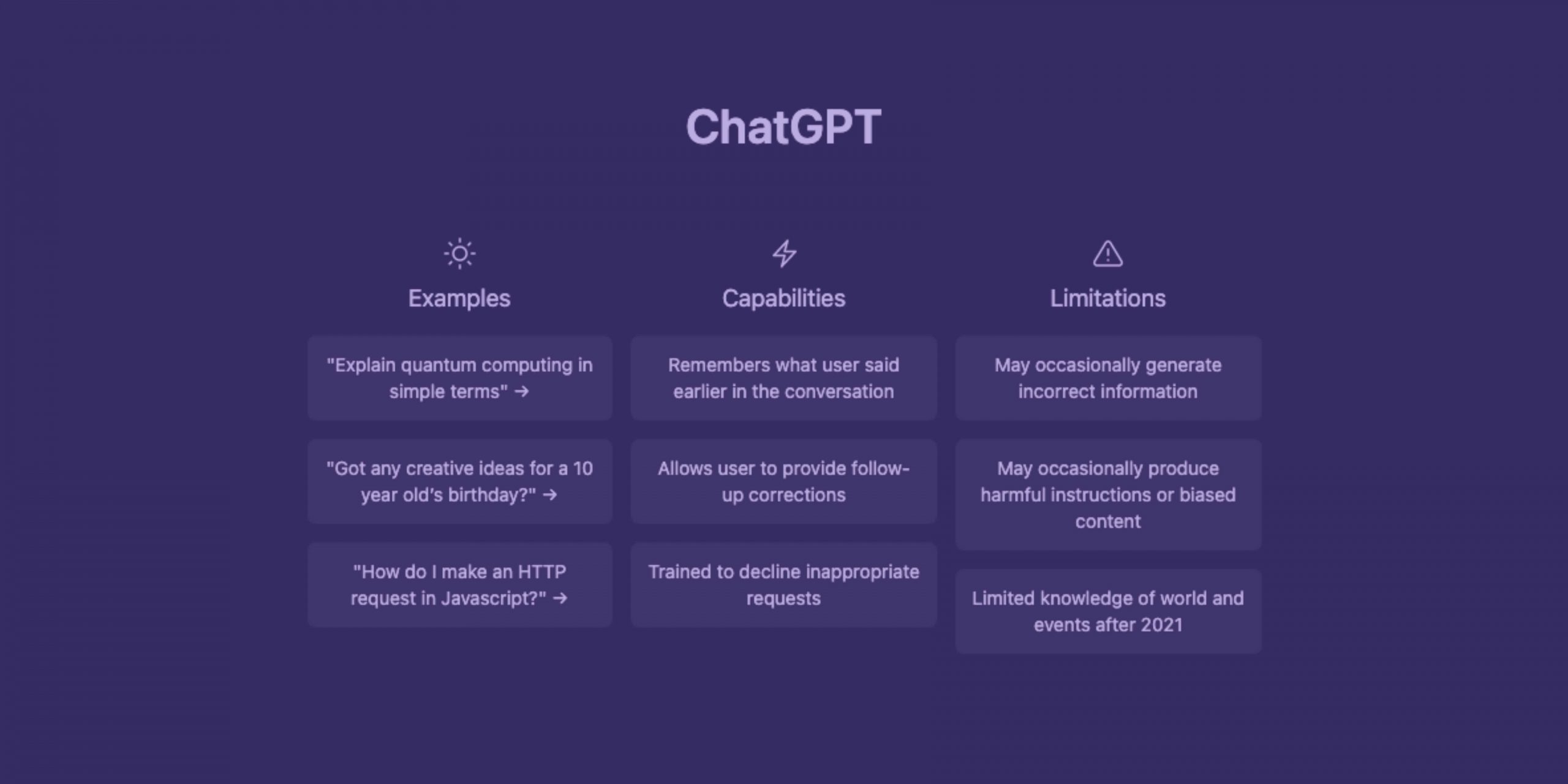

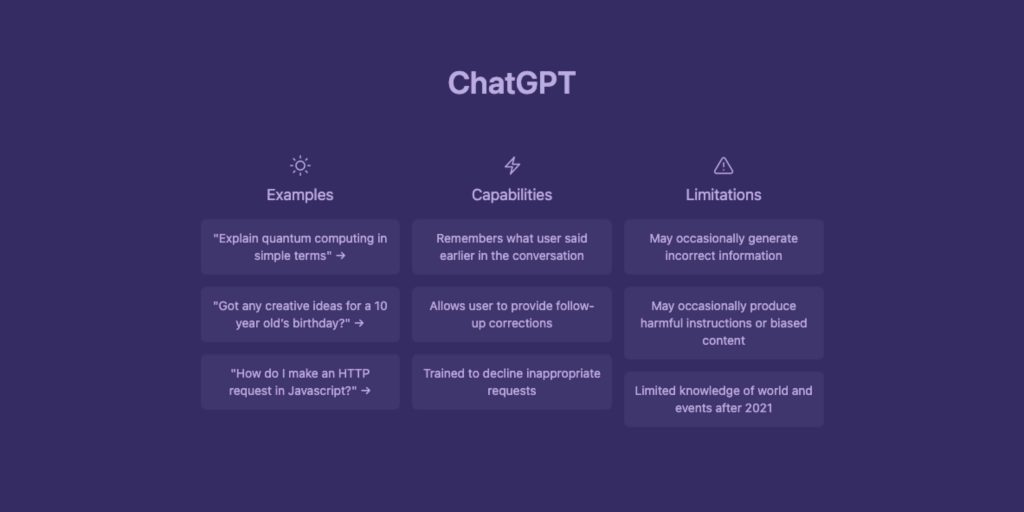

ChatGPT is a language model developed by OpenAI that generates human-like text. Because it was trained on text covering a broad range of topics, it can be used for many applications, including chatbots, content creation, language translation and question answering.

We want to show you the basics of ChatGPT and its capabilities, as well as advanced techniques for using it effectively. The aim of this course is to give you a comprehensive understanding of ChatGPT and to equip you with the knowledge and skills to use it in your own projects.

By the end of this course, you will have a solid understanding of how ChatGPT functions, how to work with the tool and how to use its features to suit your particular needs. There’s something for you in this course, whether you’re an individual looking to automate tasks, an organisation looking to improve customer engagement, or a researcher exploring new frontiers in language technology.

II. Understanding ChatGPT and its Architecture

In this module, we will dive into the details of ChatGPT and its underlying architecture. You will learn how the AI model processes and generates text and the role of the training data in fine-tuning the model.

Understanding the underlying AI model and its architecture:

ChatGPT is a state-of-the-art language model developed by OpenAI. It uses a transformer-based architecture that allows it to handle long-term dependencies and process text in a more human-like manner. You will learn about the different components of the architecture and how they contribute to the overall functionality of the model.

How ChatGPT processes and generates text:

You will learn about the process of text generation in ChatGPT and how it uses the transformer architecture to understand the context and generate a response. You will also learn about the different types of inputs and outputs used in the model and how they are processed.

Explanation of the training data used to fine-tune the model:

ChatGPT is fine-tuned on large datasets to better understand the language and generate more accurate responses. You will learn about the types of datasets used to fine-tune the model and the role they play in improving its performance.

By the end of this module, you will have a comprehensive understanding of ChatGPT and its architecture, and how it processes and generates text.

Understanding the Underlying AI Model and its Architecture

ChatGPT is an instance of a larger class of models known as Transformer-based language models. These models are based on the Transformer architecture, which was introduced in 2017 by Vaswani et al. in the paper “Attention is All You Need”. Transformer-based language models have since revolutionized the field of natural language processing and have proven to be highly effective in tasks such as language translation, text generation, and text classification.

The Transformer architecture consists of a stack of layers: an input layer, repeated blocks that each combine an attention mechanism with a feed-forward neural network, and an output layer.

The input layer takes in a sequence of tokens and converts them into numerical representations, known as embeddings. For each token, the attention mechanism calculates a weight for every token in the input sequence. These weights determine how much each token contributes to the representation of the others, and therefore to the final output.

The feed-forward neural network layer takes the weighted input and processes it further to produce a dense representation of the input sequence. The output layer takes the dense representation and generates a probability distribution over the possible outputs. The final output is then chosen from this distribution, in the simplest case by taking the option with the highest probability.
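
To make the attention step more concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It is a teaching toy, not ChatGPT's actual implementation: the function names and the tiny two-dimensional embeddings are our own, and we assume the queries, keys and values are the embeddings themselves, whereas a real Transformer learns separate projections for each.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def self_attention(embeddings):
    """Scaled dot-product self-attention with identity projections.

    Assumption: queries, keys and values are the embeddings themselves.
    """
    d = len(embeddings[0])
    outputs, all_weights = [], []
    for query in embeddings:
        # One score per token: how relevant is that token to the current one?
        scores = [dot(query, key) / math.sqrt(d) for key in embeddings]
        weights = softmax(scores)
        # The new representation is a weighted average of all token vectors.
        mixed = [
            sum(w * value[i] for w, value in zip(weights, embeddings))
            for i in range(d)
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy "token" embeddings (hypothetical values).
toy_embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(toy_embeddings)
```

Each row of `weights` sums to 1, and tokens whose vectors point in a similar direction receive the larger weights.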

ChatGPT is first pre-trained on a massive corpus of text data, such as books, articles, and social media posts, and is then fine-tuned for conversation, including with feedback from human reviewers. Fine-tuning adjusts the model’s parameters to better fit the specific task at hand. In the case of ChatGPT, the fine-tuning process is designed to improve the model’s ability to generate coherent and relevant responses to a wide variety of questions and prompts.

How ChatGPT processes and generates text

ChatGPT is a language model developed by OpenAI that uses the Transformer architecture: a deep neural network, in this case trained on a large corpus of text data. The model processes an input sequence of tokens (e.g. words or subwords) and generates an output sequence of tokens, one token at a time.

At each step, the model produces a probability distribution over the vocabulary for the next token, given the input tokens and the output generated so far. In the simplest case (greedy decoding), the token with the highest probability is selected. In practice, the next token is often sampled from the distribution instead, which is what settings such as temperature control. The process continues until a stopping criterion is reached (e.g. a special end-of-sequence token is generated, or a maximum length is reached).
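
The token-by-token loop can be sketched as follows. The `next_token_distribution` function is a hypothetical stand-in for the model: it uses a small hand-written lookup table instead of a neural network, and the loop uses greedy decoding, the simplest selection rule.

```python
def next_token_distribution(tokens):
    """Hypothetical stand-in for the model: next-token probabilities
    looked up from the last token only."""
    table = {
        "<start>": {"the": 0.9, "cat": 0.1},
        "the": {"cat": 0.7, "mat": 0.3},
        "cat": {"sat": 0.8, "<end>": 0.2},
        "sat": {"<end>": 1.0},
        "mat": {"<end>": 1.0},
    }
    # Unknown tokens simply end the sequence in this toy example.
    return table.get(tokens[-1], {"<end>": 1.0})

def generate_greedy(prompt_tokens, max_new_tokens=10):
    """Append the most probable next token until <end> or the length limit."""
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        probs = next_token_distribution(tokens)
        best = max(probs, key=probs.get)
        if best == "<end>":  # stopping criterion 1: end-of-sequence token
            break
        tokens.append(best)
    return tokens  # stopping criterion 2: max_new_tokens reached

result = generate_greedy(["<start>"])
```

With this toy table, `result` is `["<start>", "the", "cat", "sat"]`.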

Explanation of the training data used to fine-tune the model

The training data used to fine-tune a language model such as ChatGPT is a critical factor in determining the quality and functionality of the model. The training data consists of a large corpus of text, which the model uses to learn patterns and relationships between words and phrases and to develop an understanding of the structure and meaning of language.

The size and diversity of the training data are important factors that influence the performance of the model. Larger training datasets allow the model to learn a greater variety of language patterns and relationships, making it more robust and capable of handling a wider range of input. On the other hand, training on a small or narrow dataset can result in a model that is biased towards the language and content found in that dataset, and may not perform well on other types of text.

The training data should also be carefully curated to ensure that it is high-quality and free of errors, biases, or offensive content. This can involve pre-processing the text to remove any irrelevant or harmful information, or to ensure that the text is properly formatted and consistent in style and tone.

Once the training data has been prepared, the model is fine-tuned by training it on this specific data. This fine-tuning process adjusts the model’s parameters to optimize its performance for the task and domain represented by the training data. The quality of the fine-tuned model will depend on the size, diversity, and quality of the training data, as well as the choice of the model architecture and the training process used.

III. Getting Started with ChatGPT

Overview of Different Interfaces and Platforms to Access ChatGPT

Here is an overview of some of the most popular options available.

- OpenAI API: The OpenAI API provides access to ChatGPT and other OpenAI models. It allows you to integrate the models into your own applications and services, and to generate text based on a given prompt. The API is easy to use and provides a range of customization options and advanced features.

- OpenAI Playground: The OpenAI Playground is a web-based interface that provides a simple and intuitive way to interact with ChatGPT. You can type in a prompt, and the model will generate a response. The Playground also lets you adjust settings such as temperature and response length, so you can see how they change the output. The model itself does not learn from your session.

- GitHub Repositories: Many GitHub repositories provide client libraries, wrappers, and sample code that make it easier to integrate ChatGPT into your applications and services through the API. The ChatGPT model weights themselves are not publicly available, although some repositories offer open-source language models that you can run or train yourself.

- Third-party Applications: There are various third-party applications that provide access to ChatGPT, either through the OpenAI API or by using pre-trained models. These applications range from simple chatbots and virtual assistants to more complex tools that leverage the power of ChatGPT to generate content, perform sentiment analysis, or provide language translation.

There are many options available for accessing ChatGPT, each with its own strengths and weaknesses. Whether you are looking for a simple interface for generating text, or a powerful API for integrating ChatGPT into your own applications and services, there is likely to be an option that is right for you.

Setting Up an Account and Accessing the API

We will guide you through the process of setting up an account and accessing the OpenAI API, which provides access to ChatGPT and other OpenAI models.

- Sign Up: The first step is to sign up for an OpenAI account. You can do this by visiting the OpenAI website and creating a new account. Once you have signed up, you will be taken to the OpenAI Dashboard, where you can manage your account and access the API.

- Get an API Key: In order to access the OpenAI API, you need to obtain an API key. You can do this by visiting the API section of the OpenAI Dashboard, and clicking the “Get API Key” button. Once you have obtained your API key, you can use it to make API requests and generate text using ChatGPT.

- Test the API: Once you have obtained your API key, you can test the API by sending a simple request. For example, you can use the curl command line tool to send a request to the API, and see the response generated by ChatGPT.

- Integrate the API: Once you have tested the API and confirmed that it is working as expected, you can start integrating it into your own applications and services. You can do this by using one of the many libraries and tools available, or by writing your own code to make API requests and process the response generated by ChatGPT.
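
As a rough illustration of the "Test the API" and "Integrate the API" steps, here is a minimal Python sketch that uses only the standard library. Several details are assumptions that do not come from this course: the Chat Completions endpoint URL, the `gpt-3.5-turbo` model name, and reading the key from an `OPENAI_API_KEY` environment variable. Check the current OpenAI documentation before relying on any of them.

```python
import json
import urllib.request

# Assumed endpoint; verify against the current OpenAI API reference.
API_URL = "https://api.openai.com/v1/chat/completions"

def build_request(prompt, api_key, model="gpt-3.5-turbo"):
    """Build (but do not send) an HTTP request carrying a single user message."""
    body = {
        "model": model,  # assumed model name
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )

def send(request):
    """Send the request and return the text of the first response."""
    with urllib.request.urlopen(request, timeout=30) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]

# Usage (needs a valid key and network access):
#   import os
#   request = build_request("Say hello.", os.environ["OPENAI_API_KEY"])
#   print(send(request))
```

Keeping the key in an environment variable rather than in the source code makes it less likely to be shared by accident.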

Basic Usage and Interaction with ChatGPT

Let’s explain the basic usage and interaction with ChatGPT.

Interfacing with ChatGPT:

ChatGPT can be accessed through various interfaces and platforms, including the OpenAI API, OpenAI Playground, GitHub repositories, and third-party applications. Depending on the platform or interface you choose, you may need to obtain an API key, sign up for an account, or install software or libraries.

Prompting ChatGPT:

To generate text with ChatGPT, you need to provide it with a prompt. A prompt is a short piece of text that the model uses as the starting point for generating its response. The prompt should be related to the topic you want to generate text about and can be as simple or as complex as you like.

You can use the following prompts to start with:

- “Generate a list of related keywords for [topic]”

- “Identify long-tail keywords for [topic] content optimization”

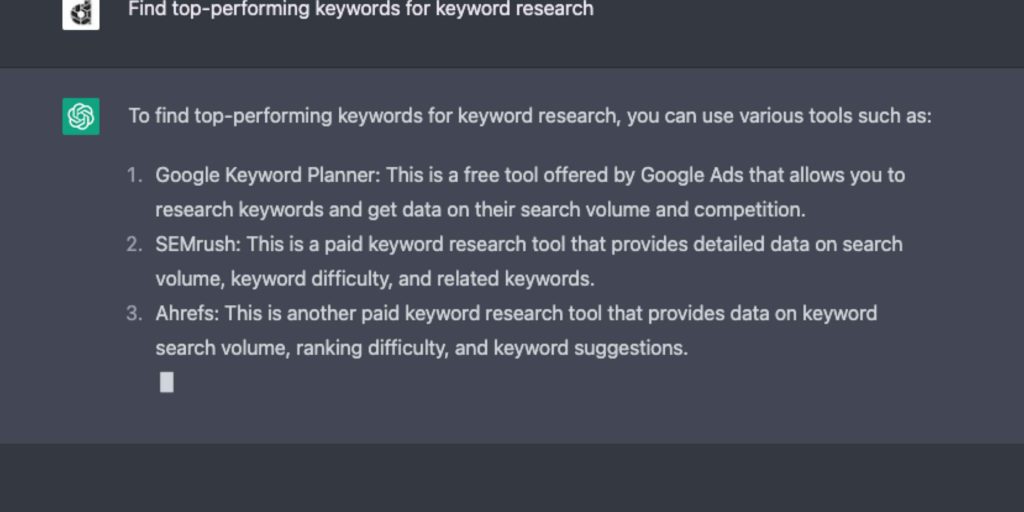

- “Find top-performing keywords for [topic]”

- “Create meta descriptions and title tags for [topic]”

- “Find opportunities for internal linking related to [topic]”

- “Generate ideas for blog posts and article topics on [topic]”

- “Research industry-specific terminology for use in [topic] content”

- “Find authoritative websites to acquire backlinks for [topic] content”

- “Generate a list of LSI keywords for [topic]”

- “Create an XML sitemap example related to [topic]”

- “Research the best meta tags for [topic]”

- “Find keywords with low competition for [topic]”

- “Create a list of synonyms for [topic] keywords”

- “Research the best internal linking structure for [topic] content”

- “Generate a list of questions people ask about [topic]”

- “Create a list of the best alt tags for images related to [topic]”

- “Create a list of related subtopics for [topic]”

- “Find the best time to publish content related to [topic]”

- “Research the best external linking strategies for [topic]”

- “Find the most popular tools used for [topic] SEO”

- “Create a list of potential influencers for [topic]”

- “Research the best schema markup for [topic]”

- “Find the best header tags for [topic] content”

- “Create a list of potential link-building opportunities for [topic]”

- “Research the best anchor text for [topic] backlinks”

- “Find the best keywords for [topic] PPC campaigns”

- “Create a list of potential guest blogging opportunities for [topic]”

- “Research the best local SEO strategies for [topic]”

- “Find the best keywords for [topic] voice search optimization”

- “Research the best analytics tools for [topic] website performance”

- “List the best keywords for [topic] featured snippets”

- “Create a list of potential partnerships for [topic]”

- “Research the best tactics for [topic] mobile optimization”

- “Find the best keywords for [topic] video optimization”

- “Research the best tactics for [topic] e-commerce optimization. Provide keyword clusters.”

- “Find the best keywords for [topic]”

- “Create a list of potential affiliate marketing opportunities for [topic]”

- “What are the best affiliate marketing websites for [topic]”

- “What are the best tactics for [topic] international SEO”

- “Find the best keywords for [topic] AMP optimization”

- “Create a list of potential podcast or podcast guest opportunities for [topic]”

- “Research the best tactics for [topic] Google My Business optimization”

- “Find the best keywords for [topic] social media optimization”

- “Find popular content topics related to [topic]”

- “Research the best SEO tactics for [topic] and provide actionable steps”

- “Create a list of potential video series or webinar ideas related to [topic]”

- “Research competitor strategies related to [topic]”

- “Find canonical tag examples related to [topic]”

- “Create an example keyword list targeting multiple geographic locations for [topic]”

- “Generate keyword ideas targeting different stages of the customer purchase funnel for [topic]”

- “Identify industry hashtags related to [topic].”
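
If you want to reuse these prompts for many topics, a small helper can fill in the `[topic]` placeholder for you. This is only a sketch, and `fill_prompt` is a name we made up for illustration.

```python
def fill_prompt(template, topic):
    """Replace the [topic] placeholder in a prompt template."""
    if "[topic]" not in template:
        raise ValueError("template has no [topic] placeholder")
    return template.replace("[topic]", topic)

templates = [
    "Generate a list of related keywords for [topic]",
    "Generate a list of questions people ask about [topic]",
]
prompts = [fill_prompt(t, "home composting") for t in templates]
```

Each entry in `prompts` can then be sent to ChatGPT through whichever interface you use.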

Generating Text:

Once you have provided ChatGPT with a prompt, it will generate a response. The response is generated based on the context provided by the prompt and is designed to be coherent and contextually relevant. The quality and length of the response will depend on various factors, such as the complexity of the prompt, the model configuration, and the available computing resources.

Customizing ChatGPT:

Depending on the platform or interface you use to access ChatGPT, you may be able to customize the model’s behaviour in various ways. For example, you may be able to set the temperature or control the length and detail of the response generated by the model. By adjusting these settings, you can tailor the output to better meet your specific needs and requirements.

IV. Customizing and Fine-Tuning ChatGPT

Understanding How to Fine-tune ChatGPT for Specific Use-Cases

While the model is pre-trained on a large corpus of diverse text, it may not be perfectly suited for every use case. Fine-tuning the model allows you to make it more specialized for your specific needs and requirements, which can result in improved accuracy, relevance, and coherence.

Fine-Tuning Approach: Fine-tuning ChatGPT involves training the model on a smaller, more targeted corpus of text. This can be achieved by using transfer learning, where the model is initialized with the pre-trained weights and then fine-tuned on your custom data. This process allows the model to learn from the new data and adjust its parameters to better fit your use case.

Choosing a Fine-Tuning Corpus: When fine-tuning ChatGPT, it is important to choose a corpus of text that is relevant to your use case. The corpus should be large enough to provide the model with enough data to learn from, but not so large that it becomes computationally infeasible. A good starting point is to use a corpus of several thousand examples.

Evaluating the Results: After fine-tuning ChatGPT, it is important to evaluate the results to ensure that the model is performing as expected. This can be done by comparing the generated text to a set of human-generated reference text, or by using metrics such as BLEU, ROUGE, or perplexity.
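
Of the metrics mentioned, perplexity is the easiest to compute by hand: it is the exponential of the average negative log-probability that the model assigned to each token of a reference text. The sketch below assumes you already have those per-token probabilities; how you obtain them depends on your tooling.

```python
import math

def perplexity(token_probabilities):
    """Perplexity = exp(mean negative log-probability of the observed tokens)."""
    if not token_probabilities:
        raise ValueError("need at least one token probability")
    mean_nll = -sum(math.log(p) for p in token_probabilities) / len(token_probabilities)
    return math.exp(mean_nll)

# A model that gives every observed token probability 0.5 has perplexity 2:
# it is as uncertain as a fair coin flip at each step.
coin_flip = perplexity([0.5, 0.5, 0.5])
```

Lower perplexity means the model found the reference text less surprising.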

With these steps, you can make the model more specialized for your needs and requirements.

How to Train the Model with Custom Data

In some situations, it may be necessary to train the model with custom data in order to achieve the desired outcomes.

Preparing the Data:

Before you can train ChatGPT with custom data, you need to prepare the data. This involves cleaning, pre-processing, and formatting the data so that it is suitable for training. It is important to ensure that the data is relevant to your use case, and that it provides a representative sample of the text that you want the model to generate.
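
The cleaning and formatting step might look like the sketch below. It normalises whitespace, drops empty and duplicate examples, and writes one JSON record per line (JSONL). The chat-style `messages` record layout is an assumption on our part. Training services define their own required formats, so check the documentation of the one you use.

```python
import json

def prepare_examples(pairs):
    """Clean (prompt, reply) pairs and convert them to chat-style records."""
    seen = set()
    records = []
    for prompt, reply in pairs:
        # Collapse runs of whitespace and strip the ends.
        prompt = " ".join(prompt.split())
        reply = " ".join(reply.split())
        # Skip empty or duplicate examples.
        if not prompt or not reply or (prompt, reply) in seen:
            continue
        seen.add((prompt, reply))
        records.append({
            "messages": [  # assumed record layout
                {"role": "user", "content": prompt},
                {"role": "assistant", "content": reply},
            ]
        })
    return records

def to_jsonl(records):
    """Serialise records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)

raw = [
    ("  What is  ChatGPT? ", "A language model."),
    ("What is ChatGPT?", "A language model."),  # duplicate after cleaning
    ("", "An answer without a question."),      # empty prompt
]
records = prepare_examples(raw)
```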

Setting Up the Training Environment:

Once the data is prepared, you need to set up the training environment. This involves installing the necessary software and libraries and setting up the computing resources required for training. Depending on the size of your data, you may need to use a GPU or a cloud-based solution in order to achieve efficient training.

Training the Model:

After setting up the training environment, you can begin training the model with your custom data. This involves defining the model architecture, setting the hyperparameters, and running the training process. The length of the training process will depend on various factors, such as the size of your data, the complexity of the model architecture, and the computing resources available.

Evaluating the Results:

After the training process is complete, it is important to evaluate the results to ensure that the model is performing as expected. This can be done by comparing the generated text to a set of human-generated reference text, or by using metrics such as BLEU, ROUGE, or perplexity.
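
To give a feel for comparing generated text with a human-written reference, here is a simplified word-overlap score in the spirit of ROUGE-1. It is not the official ROUGE implementation: it splits on whitespace and ignores stemming and punctuation handling.

```python
from collections import Counter

def unigram_overlap(candidate, reference):
    """Word-level precision, recall and F1 between two texts."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    # Counter intersection keeps the minimum count of each shared word.
    overlap = sum((cand & ref).values())
    precision = overlap / max(sum(cand.values()), 1)
    recall = overlap / max(sum(ref.values()), 1)
    f1 = 0.0 if overlap == 0 else 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall, "f1": f1}

scores = unigram_overlap("the cat sat", "the cat sat on the mat")
```

Here every generated word appears in the reference (precision 1.0), but only half of the reference words were generated (recall 0.5).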

Fine-Tuning the Model:

If the results are not as expected, you may need to fine-tune the model by adjusting the hyperparameters, changing the model architecture, or using a different training corpus. Fine-tuning the model can help to improve the accuracy, relevance, and coherence of the generated text.

Explanation of the Different Parameters Used to Control the Generation Process

In order to control the generation process, it is possible to adjust various parameters that affect the behaviour of the model.

Temperature:

The temperature parameter controls the diversity of the generated text. A high temperature results in more diverse and unpredictable text, while a low temperature results in more conservative and predictable text.
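
One common way to implement temperature is to divide the model's raw scores (logits) by the temperature before turning them into probabilities. The sketch below uses made-up logits for three candidate tokens.

```python
import math

def softmax_with_temperature(logits, temperature=1.0):
    """Convert logits to probabilities after scaling them by 1/temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [value / temperature for value in logits]
    highest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.0]  # hypothetical scores for three tokens
sharp = softmax_with_temperature(logits, temperature=0.5)
flat = softmax_with_temperature(logits, temperature=2.0)
```

At the low temperature, most of the probability goes to the top-scoring token. At the high temperature, the three probabilities move closer together, so sampling becomes less predictable.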

Top-k Sampling:

The top-k sampling parameter limits the number of next-token candidates that the model considers at each step: only the k most probable tokens remain eligible. A high top-k value results in more diverse text, while a low top-k value results in more conservative text.
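
A minimal sketch of top-k filtering, using a made-up next-token distribution: keep the k most probable tokens, discard the rest, and rescale so the remaining probabilities sum to 1.

```python
def top_k_filter(probs, k):
    """Keep the k most probable tokens and renormalise their probabilities."""
    if k < 1:
        raise ValueError("k must be at least 1")
    kept = sorted(probs.items(), key=lambda item: item[1], reverse=True)[:k]
    total = sum(p for _, p in kept)
    return {token: p / total for token, p in kept}

probs = {"cat": 0.5, "dog": 0.3, "fish": 0.15, "emu": 0.05}  # hypothetical
top2 = top_k_filter(probs, 2)
```

With k = 2, only "cat" and "dog" can be sampled, with probabilities 0.625 and 0.375.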

Top-p Sampling:

The top-p sampling parameter (also called nucleus sampling) limits the candidates by cumulative probability: the model considers only the smallest set of most probable tokens whose combined probability reaches p. A high top-p value results in more diverse text, while a low top-p value results in more conservative text.
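
Top-p filtering can be sketched in a similar way: walk through the tokens from most to least probable and stop as soon as their cumulative probability reaches p. The distribution below is made up for illustration.

```python
def top_p_filter(probs, p):
    """Keep the smallest set of most probable tokens whose total reaches p."""
    if not 0 < p <= 1:
        raise ValueError("p must be in the range (0, 1]")
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    kept, cumulative = [], 0.0
    for token, prob in ranked:
        kept.append((token, prob))
        cumulative += prob
        if cumulative >= p:
            break
    total = sum(prob for _, prob in kept)
    return {token: prob / total for token, prob in kept}

nucleus = top_p_filter({"cat": 0.5, "dog": 0.3, "fish": 0.15, "emu": 0.05}, 0.75)
```

Unlike top-k, the number of surviving tokens varies: a confident distribution keeps few candidates, while a flat one keeps many.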

Sequence Length:

The sequence length parameter controls the maximum length of the generated text, usually measured in tokens. A high sequence length allows longer text, while a low sequence length cuts the response off sooner.

Number of Responses:

The number of responses parameter controls how many alternative responses the model generates for a given prompt. A higher number gives you more candidates to choose from, at the cost of more computation.
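
When you call the model through an API, these parameters are usually sent as fields of the request. The sketch below collects them in a dictionary using the field names we believe the OpenAI API uses (`temperature`, `top_p`, `max_tokens`, `n`). Treat these names and the allowed ranges as assumptions to verify against the current API reference. As far as we know, the OpenAI API does not offer a top-k setting, so it is left out.

```python
def generation_settings(temperature=1.0, top_p=1.0, max_tokens=256, n=1):
    """Validate generation parameters and return them as request fields."""
    if not 0 <= temperature <= 2:  # assumed allowed range
        raise ValueError("temperature must be between 0 and 2")
    if not 0 < top_p <= 1:
        raise ValueError("top_p must be in the range (0, 1]")
    if max_tokens < 1:
        raise ValueError("max_tokens must be at least 1")
    if n < 1:
        raise ValueError("n must be at least 1")
    return {
        "temperature": temperature,  # diversity of the output
        "top_p": top_p,              # nucleus sampling threshold
        "max_tokens": max_tokens,    # sequence length limit
        "n": n,                      # number of responses
    }

settings = generation_settings(temperature=0.2, max_tokens=100, n=2)
```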

V. Advanced Usage and Applications

Overview of Common Use-Cases for ChatGPT

Here are some of the common use cases for ChatGPT.

- Language Generation: One of the most common use cases for ChatGPT is language generation, where the model is used to generate text based on a given prompt. This can be useful for a variety of applications, such as creative writing, news articles, product descriptions, and more.

- Conversation: Another common use case for ChatGPT is in building conversational agents, such as chatbots, that can respond to user input in a natural and human-like manner. ChatGPT can be used to generate responses to user questions, making it possible to build interactive conversational systems.

- Summarization: ChatGPT can also be used for summarization, where the model is used to generate a short summary of a given text. This can be useful for news articles, research papers, and other types of long-form text.

- Question Answering: ChatGPT can also be used for question answering, where the model is used to generate an answer to a user’s question based on a given text. This can be useful for a variety of applications, such as search engines, knowledge bases, and more.

ChatGPT has a wide range of applications in various domains, making it a versatile and powerful tool for language generation. Whether you are looking to build a chatbot, generate creative writing, or summarize text, ChatGPT can be a valuable tool to help you achieve your goals.
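
In practice, use cases such as summarization and question answering differ mainly in how the prompt is written. The helpers below are a sketch with hypothetical function names. They assume a chat-style message format with `system` and `user` roles.

```python
def summarization_messages(text, max_sentences=3):
    """Build messages asking for a short summary of the given text."""
    return [
        {"role": "system", "content": "You summarize texts accurately and concisely."},
        {"role": "user", "content": f"Summarize the following text in at most {max_sentences} sentences:\n\n{text}"},
    ]

def question_answering_messages(context, question):
    """Build messages asking a question that must be answered from the context."""
    return [
        {"role": "system", "content": "Answer using only the provided text. Say so if the answer is not in it."},
        {"role": "user", "content": f"Text:\n{context}\n\nQuestion: {question}"},
    ]

messages = question_answering_messages(
    "The Transformer architecture was introduced in 2017.",
    "When was the Transformer architecture introduced?",
)
```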

Building and Integrating ChatGPT into Various Applications and Systems

How can you build and integrate ChatGPT into your applications and systems?

API Integration:

ChatGPT is available as an API, making it possible to integrate it into your existing applications and systems. You can use the API to generate text based on a given prompt, making it a valuable tool for building conversational systems, summarization systems, and more.

In addition to API integration, you can also build custom integrations for ChatGPT to meet your specific needs and requirements. This can involve integrating the model into your existing workflows, building custom interfaces for the model, and more.

Deployment:

Once you have integrated ChatGPT into your applications and systems, it is important to deploy them in a manner that meets your needs and requirements. Because the model itself runs on OpenAI’s servers and is reached through the API, this means deciding where the application that calls it runs: on-premise, in the cloud, or in a hybrid setup.

Whether you are using the API or building a custom integration, the process of integrating ChatGPT into your systems requires careful planning and consideration to ensure that it meets your needs and requirements.

Understanding How to Deal with Common Challenges and Limitations

ChatGPT is a powerful language generation model, but like any technology, it also has its challenges and limitations. In this section, we will explain how to deal with some of the common challenges and limitations when using ChatGPT.

- Bias and Fairness: One of the challenges when using ChatGPT is ensuring that the generated text is unbiased and fair. As the model is trained on a large corpus of text, it may sometimes generate text that is biased or unfair. To mitigate this issue, it is important to monitor the output of the model and make adjustments as needed to ensure that the generated text is unbiased and fair.

- Quality of Training Data: The quality of the training data used to fine-tune the model can have a significant impact on the quality of the generated text. It is important to ensure that the training data is high-quality, relevant, and diverse to produce the best results.

- Control Over Generation: Another challenge when using ChatGPT is controlling the generation process. While the model has a high level of creativity and generates text that is often coherent and well-written, it may sometimes generate text that is not relevant or appropriate. To address this issue, it is possible to control the generation process by adjusting the parameters and settings used by the model.

- Limitations of the Model: Finally, it is important to be aware of the limitations of the model and understand that it is not always possible to generate text that is perfect and error-free. The model is trained on a large corpus of text and may sometimes generate text that is grammatically incorrect, nonsensical, or irrelevant.

You now have a thorough understanding of the challenges and limitations of the model. By monitoring the output, ensuring high-quality training data, controlling the generation process, and being aware of the limitations of the model, it is possible to use ChatGPT effectively and produce high-quality text.
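
Monitoring the output can start very simply. The sketch below flags responses that are empty, too long, or contain terms you have decided to block. The function name, the word limit and the blocked terms are placeholders of our own. Checks like these complement human review and do not replace it.

```python
def review_output(text, blocked_terms=(), max_words=200):
    """Return a list of issues found in a generated response (empty if none)."""
    issues = []
    words = text.split()
    if not words:
        issues.append("empty response")
    if len(words) > max_words:
        issues.append("response too long")
    lowered = text.lower()
    for term in blocked_terms:
        if term.lower() in lowered:
            issues.append(f"contains blocked term: {term}")
    return issues

issues = review_output("Our product is guaranteed to cure everything.",
                       blocked_terms=["guaranteed"])
```

A response that comes back with a non-empty list can be regenerated or sent to a person for review.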

VI. Best Practices and Ethical Considerations

Best Practices for Working with AI Models

As AI models become more prevalent and widely used, it is important to be aware of best practices for working with these models. One of the most important considerations is data privacy and security. When working with AI models, it is important to ensure that personal and sensitive data is protected and not misused. This includes taking steps to encrypt sensitive data, store it securely, and limit access to authorized personnel only. In addition, it is important to be aware of and comply with relevant data protection and privacy regulations, such as the European Union’s General Data Protection Regulation (GDPR).
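
One practical precaution is to remove obvious personal data from text before sending it to an external service. The sketch below masks e-mail addresses and phone-like numbers with regular expressions. The patterns are deliberately simple assumptions. They will miss many formats and are not enough on their own to meet regulations such as the GDPR.

```python
import re

# Simplified patterns (assumptions): real-world data needs more careful handling.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

def redact(text):
    """Replace e-mail addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)

safe_prompt = redact("Contact jane.doe@example.com or +1 (555) 010-9999.")
```

Here `safe_prompt` becomes `Contact [EMAIL] or [PHONE].`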

Understanding the Ethical Considerations of AI and Language Models

AI and language models raise a number of ethical considerations, including issues related to accountability, transparency, bias, and fairness. For example, it is important to ensure that AI models do not perpetuate existing biases or create new ones. In addition, it is important to ensure that AI models are transparent and that the decision-making processes behind them are understood and explained. These ethical considerations are important to ensure that AI models are used responsibly and in a way that benefits society as a whole.

The Role of ChatGPT in Shaping the Future of AI and Language Technology

ChatGPT is a powerful language model that has the potential to shape the future of AI and language technology in a number of ways. For example, it has the potential to revolutionize natural language processing and language generation, allowing for the creation of more intelligent and sophisticated language-based systems and applications. In addition, ChatGPT has the potential to improve the accuracy and effectiveness of language-based models, making them more useful and practical for a wide range of applications. As AI and language technology continue to advance, the role of ChatGPT will likely become increasingly important and its impact on these fields will continue to grow.

VII. ChatGPT Prompts

User Persona

- “What are the best ways to create user personas for a specific project?”

- “How can I effectively use user personas to guide design and development decisions?”

- “What are some best practices for creating user personas based on real user research and data?”

- “How can I use user personas to improve the usability and user-centeredness of a product or service?”

- “What are some effective ways to handle user personas for different cultures and languages?”

- “How can I use user personas to create a consistent user experience across different products or applications?”

- “What are some best practices for updating and maintaining user personas?”

- “How can I use user personas to improve accessibility and universal usability?”

- “What are some best practices for creating user scenarios and user journeys based on user personas?”

- “How can I use user personas to improve the efficiency and speed of design and development?”

- “What are some best practices for sharing and distributing user personas within a team or organization?”

- “How can I use user personas to improve collaboration and communication among designers, developers, and stakeholders?”

- “What are some best practices for creating user personas for different industries and niches?”

- “How can I use user personas to create a sense of unity and cohesiveness in a design?”

- “What are some best practices for creating user personas for a responsive and adaptive design?”

Market Research

- “What are the best ways to conduct primary market research to gain insights into customer needs and preferences?”

- “How can I effectively use surveys and questionnaires to gather market research data?”

- “What are some best practices for conducting focus groups and user interviews to gain insights into customer behavior and preferences?”

- “How can I use secondary market research, such as industry reports and competitor analysis, to gain a better understanding of the market?”

- “What are some effective ways to segment and target a specific market or audience for market research?”

- “How can I use online tools and platforms, such as social media and search engines, to conduct market research and gather data?”

- “What are some best practices for creating a research plan and budget for a market research project?”

- “How can I use data visualization and analytics to analyze and interpret market research data?”

- “What are some best practices for conducting international market research and understanding cultural differences in customer behavior?”

- “How can I use A/B testing and experimentation to validate market research findings and inform product development?”

- “What are some best practices for conducting research on emerging technologies and trends in the market?”

- “How can I use customer journey mapping to gain a comprehensive understanding of the customer experience?”

- “What are some best practices for conducting user research and usability testing as part of a market research project?”

- “How can I use ethnographic research to observe and study customer behavior in real-world settings?”

- “What are some best practices for using data from multiple sources and methods in a market research project?”

Qualitative User Research

- “What are the best ways to recruit participants for qualitative research?”

- “How can I design effective interview questions for qualitative research?”

- “What are some best practices for conducting user interviews?”

- “What are some common pitfalls to avoid when conducting user research?”

- “How can I effectively analyze and interpret qualitative research data?”

- “What are some best practices for conducting usability testing?”

- “What are some effective methods for gathering user feedback?”

- “How can I use ethnographic research to gain a deeper understanding of users’ behaviors and needs?”

- “How can I use user personas to inform product design and development?”

- “What are some best practices for conducting focus groups?”

- “How can I use card sorting to understand user navigation and information architecture?”

- “What are some best practices for conducting user diaries or journals?”

- “How can I use user journey mapping to understand the complete user experience?”

- “What are some best practices for conducting user co-creation workshops?”

- “How can I use user testing to validate design decisions?”

- “What are some best practices for conducting remote user research?”

- “How can I use data visualization to present user research findings?”

- “What are some best practices for conducting user research with diverse populations?”

- “How can I use user research to inform product roadmap decisions?”

UX Workshop

- “What are the best ways to plan and organize a UX workshop for a specific project?”

- “How can I effectively facilitate a UX workshop with a team?”

- “What are some best practices for creating a workshop agenda and defining workshop goals?”

- “How can I use brainstorming and problem-solving techniques during a UX workshop?”

- “What are some effective ways to handle stakeholder involvement and feedback during a UX workshop?”

- “How can I use a UX workshop to quickly validate product ideas and solve user problems?”

- “What are some best practices for selecting the right team members for a UX workshop?”

- “How can I use a UX workshop to align on a common vision and direction for a project?”

- “What are some best practices for conducting user research and testing during a UX workshop?”

- “How can I use a UX workshop to identify and prioritize key features and requirements for a product?”

- “What are some best practices for conducting usability testing during a UX workshop?”

- “How can I use a UX workshop to generate new ideas and solutions for a project?”

- “What are some best practices for conducting a UX workshop with remote or distributed teams?”

- “How can I use a UX workshop to create a shared understanding and alignment among team members?”

- “What are some best practices for conducting a UX workshop with a tight deadline or limited resources?”

KPIs and Metrics

- How can I establish clear and measurable goals for my digital product?

- How can I track user engagement and retention for my digital product?

- What metrics should I use to measure the success of my digital product?

- How can I set up a system for monitoring and analyzing user behavior on my digital product?

- How can I use data to make informed decisions about my digital product?

- How can I measure the ROI of my digital product?

- What are some best practices for collecting and analyzing user feedback?

- How can I use analytics to identify areas for improvement in my digital product?

- How can I use metrics to identify and prioritize features for my digital product?

- How can I use key performance indicators (KPIs) to measure the effectiveness of my digital product?

- How can I use heat maps to identify areas of high user engagement on my digital product?

- How can I use user testing to validate key performance indicators for my digital product?

- How can I use data to identify patterns and trends in user behavior on my digital product?

- How can I use metrics to track the performance of my digital product over time?

Psychology

- How can we use psychological principles to create a more engaging user experience?

- What are some psychological triggers that can be used to increase user engagement?

- How can we use psychology to design more persuasive digital products?

- How can we use cognitive science to improve the usability of our digital products?

- How can we use psychology to design more effective forms and surveys for digital products?

- How can we use psychology to create more effective onboarding experiences for digital products?

- How can we use psychology to design more effective error messages and notifications for digital products?

- How can we use psychology to create more effective calls to action for digital products?

- How can we use psychology to design more effective search functionality for digital products?

- How can we use psychology to design more effective navigation for digital products?

- How can we use psychology to design more effective content for digital products?

- How can we use psychology to design more effective social features for digital products?

- How can we use psychology to design more effective gamification for digital products?

- How can we use psychology to design more effective personalization for digital products?

- How can we use psychology to design more effective recommendations for digital products?

- How can we use psychology to design more effective email marketing for digital products?

- How can we use psychology to design more effective push notifications for digital products?

- How can we use psychology to design more effective in-app messaging for digital products?

- How can we use psychology to design more effective customer service for digital products?

- How can we use psychology to design more effective post-purchase experiences for digital products?

- What are some best practices for using cognitive load theory in UX design?

- How can I use the principles of perception in digital product design?

- What are some best practices for using memory principles in digital product design?

- How can I use the principles of attention in digital product design?

- What are some best practices for using the principles of decision-making in digital product design?

- How can I use the principles of motivation in digital product design?

- What are some best practices for using the principles of problem-solving in digital product design?

- How can I use the principles of heuristics in digital product design?

- What are some best practices for using the principles of mental models in digital product design?

- How can we apply theory of mind principles to design more user-centered digital products?

- What are some best practices for incorporating theory of mind into user research and testing?

- How can we use theory of mind to improve the usability and user experience of digital products?

- What are some common misconceptions about theory of mind in digital product design?

- “Write a marketing campaign outline using the ‘Reciprocity Bias’ framework to create a sense of obligation in [ideal customer persona] to try our [product/service]. Include value-adds or bonuses, and encourage reciprocity by asking for a favor or action in return.”

- “Using the ‘Attribution Bias’ framework, please write a marketing campaign outline that attributes the successes or failures of our [product/service] to internal factors. Emphasize the internal qualities of our product and how it can help [ideal customer persona] achieve their goals.”

- “Write a marketing campaign outline using the ‘Anchoring Bias’ framework to shape the perceptions of [ideal customer persona] about our [product/service]. Highlight the most important or relevant information first, and use this information as an anchor to influence their decisions.”

- “Using the ‘Self-Handicapping’ framework, please write a marketing campaign outline that addresses potential obstacles or doubts [ideal customer persona] may have about using our [product/service]. Offer support and resources to help them overcome these challenges, and emphasize the internal qualities of our product that can help them achieve their goals.”

- “Write a marketing campaign outline using the ‘Confirmation Bias’ framework to appeal to the [ideal customer persona]’s preexisting beliefs about [subject]. Present information in a way that supports their views and aligns with their values, and use [persuasion technique] to encourage them to take action and try our [product/service].”

- “Write a marketing campaign outline using the ‘Self-Serving Bias’ framework to highlight the successes people can achieve with our [product/service] and downplay the role of external factors in the outcomes. Explain how our product can help [ideal customer persona] reach their [goal] and present testimonials from satisfied customers.”

- “Using the ‘Social Comparison’ framework, please write a marketing campaign outline that highlights the successes of others using our [product/service] and how it can help [ideal customer persona] achieve similar results. Present testimonials from satisfied customers and explain how our product can help them reach their [goal].”
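The bracketed placeholders in the prompts above, such as [ideal customer persona] and [product/service], need to be replaced with your own details before you send the prompt to ChatGPT. The following is a minimal Python sketch of one way to do that. The function name `fill_prompt` and the sample values are hypothetical and chosen only for illustration; they are not part of ChatGPT or any OpenAI library.

```python
import re

# Matches any [placeholder] that contains no nested brackets.
PLACEHOLDER = re.compile(r"\[([^\[\]]+)\]")


def fill_prompt(template, values):
    """Replace each [placeholder] in the template with its value.

    Raises KeyError if a placeholder has no value, so an unfilled
    bracket never reaches the model by accident.
    """
    def substitute(match):
        key = match.group(1)
        if key not in values:
            raise KeyError(f"No value supplied for placeholder [{key}]")
        return values[key]

    return PLACEHOLDER.sub(substitute, template)


# One of the prompts from the list above, shortened for the example.
template = (
    "Write a marketing campaign outline using the 'Anchoring Bias' framework "
    "to shape the perceptions of [ideal customer persona] about our "
    "[product/service]."
)

# Hypothetical sample values; substitute your own.
prompt = fill_prompt(template, {
    "ideal customer persona": "freelance UX designers",
    "product/service": "prototyping tool",
})
print(prompt)
```

Failing on a missing value is a deliberate choice here: a prompt that still contains a literal bracket tends to produce generic output, so it is better to catch the gap before the prompt is sent.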

Recap of the key learning outcomes and takeaways

Have you ever wished for an AI language model that could help you create high-quality content for a variety of applications? Look no further than ChatGPT, developed by OpenAI. This cutting-edge model is trained on a massive corpus of text, enabling it to generate text that is both coherent and relevant to a wide range of topics.

But its impressive abilities come with challenges and limitations, including the potential for bias in its output and its dependence on the quality of the training data. It’s important to be aware of these limitations and to follow best practices for data privacy and security when working with AI models like ChatGPT.

The good news is that fine-tuning lets you adjust the model’s parameters by training it on examples from your specific use case, which can produce more relevant, higher-quality output. Integrating ChatGPT into your applications and systems also becomes easier once you understand how to control the generation process and work around common challenges.

From language generation to conversation and summarization, the use cases for ChatGPT are diverse. But with great power comes great responsibility. As we continue to push the boundaries of AI and language technology, it’s crucial to remember the ethical considerations that come with such advancements. Use ChatGPT in a responsible and transparent manner, and you’ll be well on your way to revolutionizing the content creation process.